Tutorial

This tutorial describes a basic workflow of using Klever. We assume that you deployed Klever locally on Debian 11 in the production mode with default settings. In addition, we assume that your username is debian and your home directory is /home/debian1.

Preparing Build Bases

After a successful deployment of Klever you need to get a build base2. This tutorial treats just build bases for Linux kernel loadable modules since the publicly available version of Klever has only experimental support for verification of other software. You should not expect that Klever supports all versions and configurations of the Linux kernel well. There is a big list of things to do in this direction.

Below we consider as an example a build base for verification of kernel loadable modules of Linux 5.5.19 (architecture x86_64, configuration allmodconfig). You can download the archive of the target build base prepared in advance from here. Let’s assume that you unpack this archive into directory /home/debian/build-base-linux-5.5.19-x86_64-allmodconfig so that there should be file meta.json directly at the top level in that directory. Besides, you can use in a similar way build bases prepared for:

If you want to prepare the build base yourself, we recommend to do this on the same machine where you deployed Klever since it already contains everything necessary. You can try to execute similar steps for other versions and configurations of the Linux kernel at your own risks. To build other versions of the Linux kernel you may need appropriate versions of GCC as well as other build time prerequisites.

To prepare the target build base from scratch you can follow the next steps:

$ wget https://cdn.kernel.org/pub/linux/kernel/v5.x/linux-5.5.19.tar.xz

$ tar -xJf linux-5.5.19.tar.xz

$ cd linux-5.5.19

$ make allmodconfig

$ source $KLEVER_SRC/venv/bin/activate

$ clade -w ~/build-base-linux-5.5.19-x86_64-allmodconfig -p klever_linux_kernel --cif $KLEVER_DEPLOY_DIR/klever-addons/CIF/bin/cif make -j8 modules

Then you will need to wait for quite a long period of time depending on the performance of your machine (typically several hours). If everything will go well, you will have the target build base in directory /home/debian/build-base-linux-5.5.19-x86_64-allmodconfig.

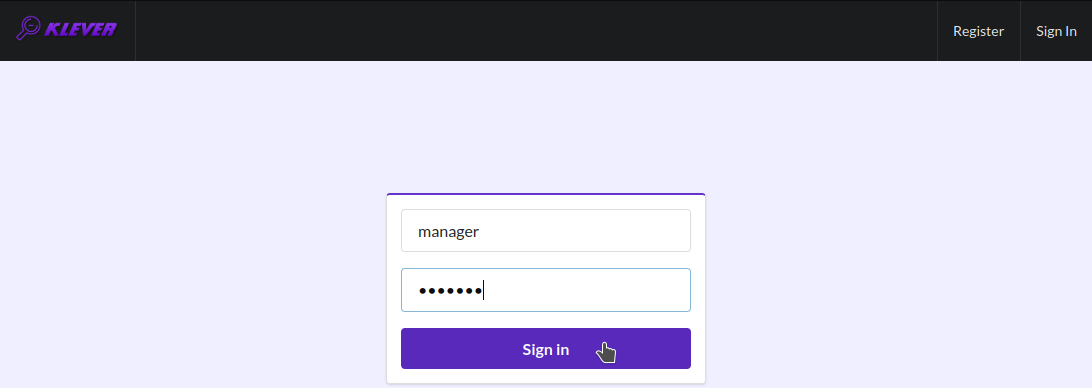

Signing in

Before performing all other actions described further in this tutorial you need to sign in using a Klever web interface:

Open page http://localhost:8998 in your web-browser3.

Input manager as a username and a password and sign in (Fig. 1).

Then you will be automatically redirected to a job tree page presented in the following sections.

Fig. 1 Signing in

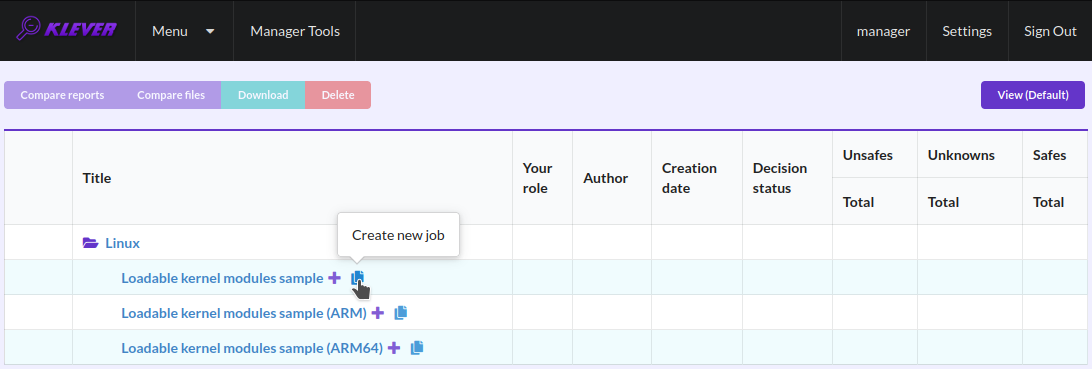

Starting Verification

As an example we consider checking usage of clocks and memory safety in USB drivers. To start up verification you need to do as follows:

Start the creation of a new job (Fig. 2).

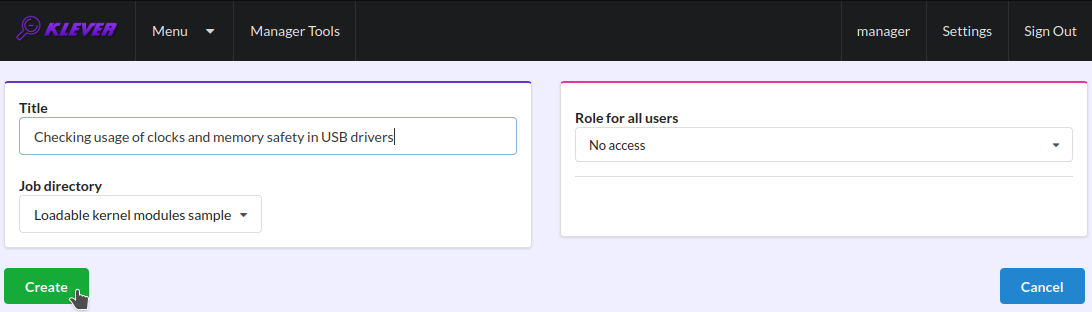

Specify an appropriate title and create the new job (Fig. 3).

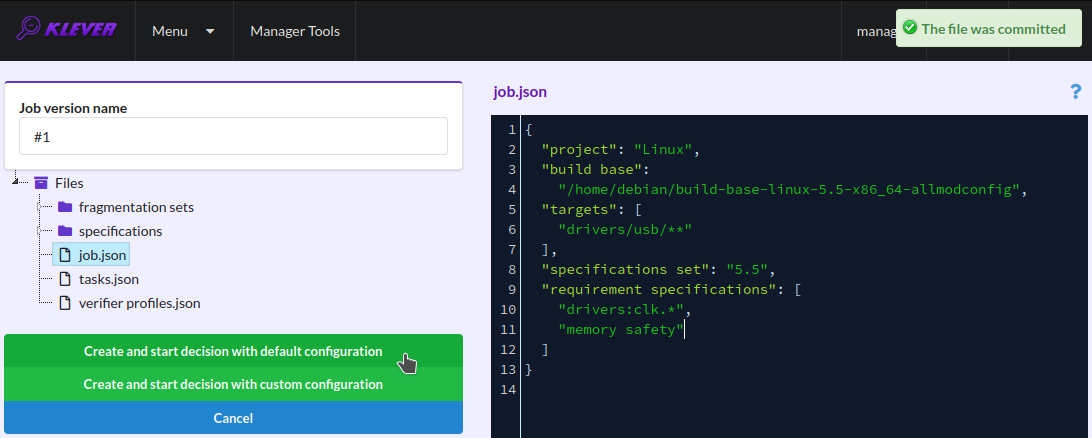

To configure a first job version you need to specify (Fig. 4):

The path to the prepared build base that is /home/debian/build-base-linux-5.5.19-x86_64-allmodconfig.

Targets, e.g. USB drivers, i.e. all modules from directory drivers/usb in our example.

Specifications set 5.5 (you can see a list of all supported specification sets at the end of this section).

Requirement specifications to be checked, e.g. drivers:clk.* and memory safety in our example (you can see a list of all supported requirement specifications at the end of this section).

Press Ctrl-S with your mouse pointer being at the editor window to save changes.

Start a decision of the job version (Fig. 4).

After that Klever automatically redirects you to a job version/decision page that is described in detail in the following sections.

Fig. 2 Starting the creation of a new job

Fig. 3 The creation of the new job

Fig. 4 Configuring the first job version and starting its decision

Later you can create new jobs by opening the job tree page, e.g. through clicking on the Klever logo (Fig. 5), and by executing steps above. You can create new jobs even when some job version is being decided, but various job versions are decided one by one by default.

Fig. 5 Opening the job tree page

Below there are requirement specifications that you can choose for verification of Linux loadable kernel modules (we do not recommend to check requirement specifications which identifiers are italicised since they produce either many false alarms or there are just a few violations of these requirements at all):

alloc:irq

alloc:spinlock

alloc:usb lock

arch:asm:dma-mappingfile:///home/novikov/work/klever/docs/_build/html/tutorial.html#preparing-build-bases

arch:mm:ioremap

block:blk-core:queue

block:blk-core:request

block:genhd

concurrency safety

drivers:base:class

drivers:usb:core:usb:coherent

drivers:usb:core:usb:dev

drivers:usb:core:driver

drivers:usb:core:urb

drivers:usb:gadget:udc-core

drivers:clk1

drivers:clk2

fs:sysfs:group

kernel:locking:mutex

kernel:locking:rwlock

kernel:locking:spinlock

kernel:module

kernel:rcu:update:lock bh

kernel:rcu:update:lock shed

kernel:rcu:update:lock

kernel:rcu:srcu

kernel:sched:completion

lib:find_next_bit

lib:idr

memory safety

net:core:dev

net:core:rtnetlink

net:core:sock

In case of verification of the Linux kernel rather than vanilla 5.5, you may need to change a value of option specifications set when configuring the job version (Fig. 4). Klever supports following specification sets:

2.6.33

3.2

3.14

3.14-dentry-v2

4.6.7

4.15

4.17

5.5

5.17

These specification sets correspond to vanilla versions of the Linux kernel. You should select such a specifications set that matches your custom version of the Linux kernel better through the trial and error method.

Decision Progress

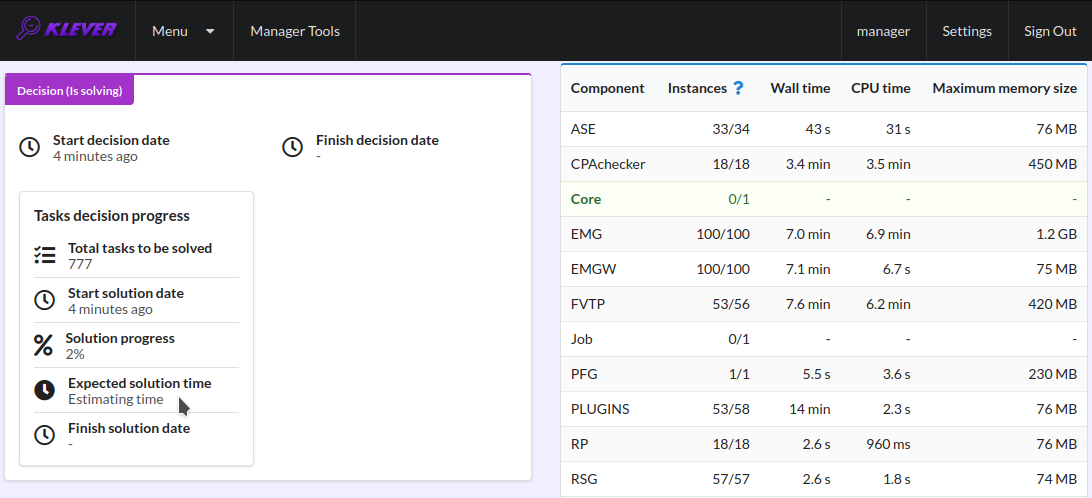

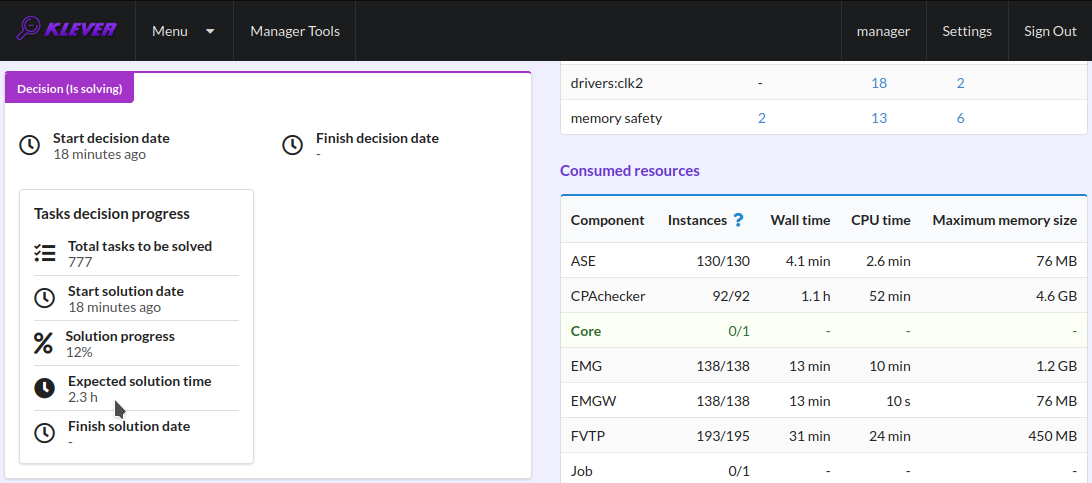

At the beginning of the decision of the job version Klever indexes each new build base. This can take rather much time before it starts to generate and to decide first tasks4 for large build bases. In about 15 minutes you can refresh the page and see results of decision for some tasks there. Please, note that the automatic refresh of the job version/decision page stops after 5 minutes, so you either need to refresh it through conventional means of your web browser or request Klever to switch it on back (Fig. 6).

Fig. 6 Switching on the automatic refresh of the job version/decision page

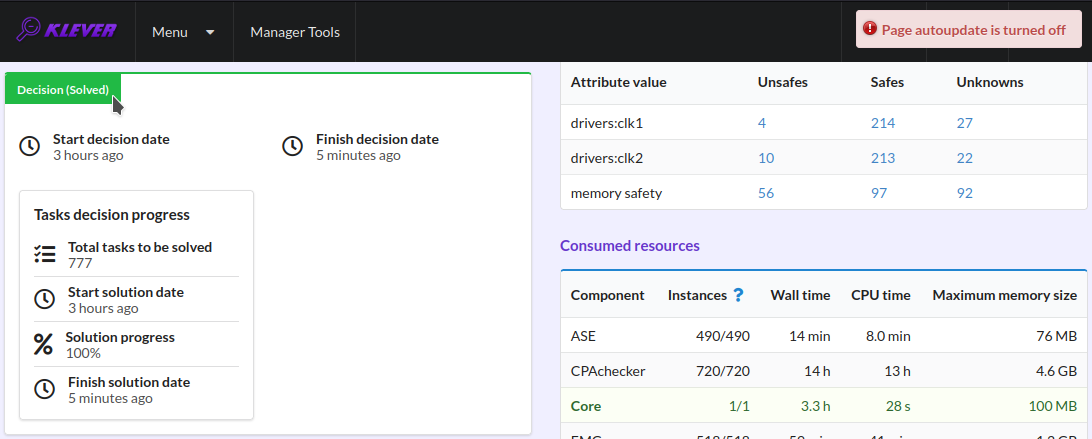

Before the job version is eventually decided Klever estimates and provides a decision progress (Fig. 7 and Fig. 8). You should keep in mind that Klever collects statistics for 10% of tasks before it starts predicting an approximate remaining time for their decision. After that, it recalculates it on the base of accumulated statistics. In our example it takes about 3 hours to decide the job version completely (Fig. 9).

Fig. 7 The progress of the decision of the job version (estimating a remaining time)

Fig. 8 The progress of the decision of the job version (the remaining time is estimated)

Fig. 9 The completed decision of the job version

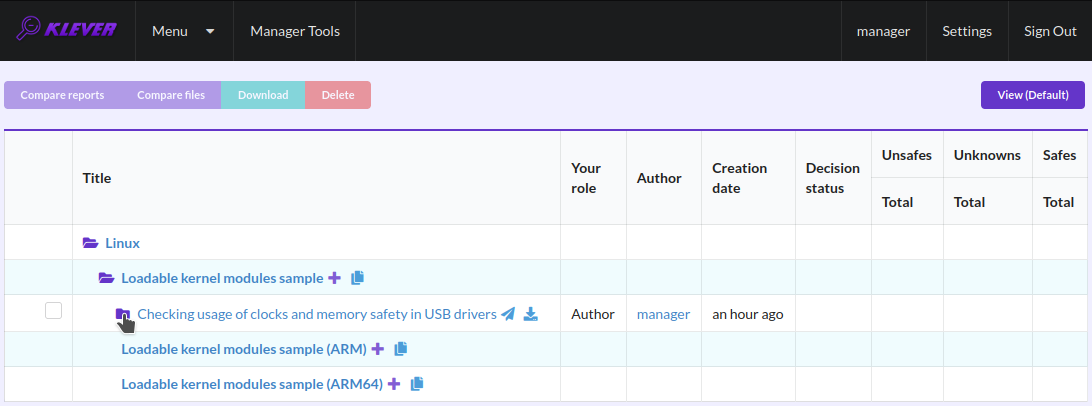

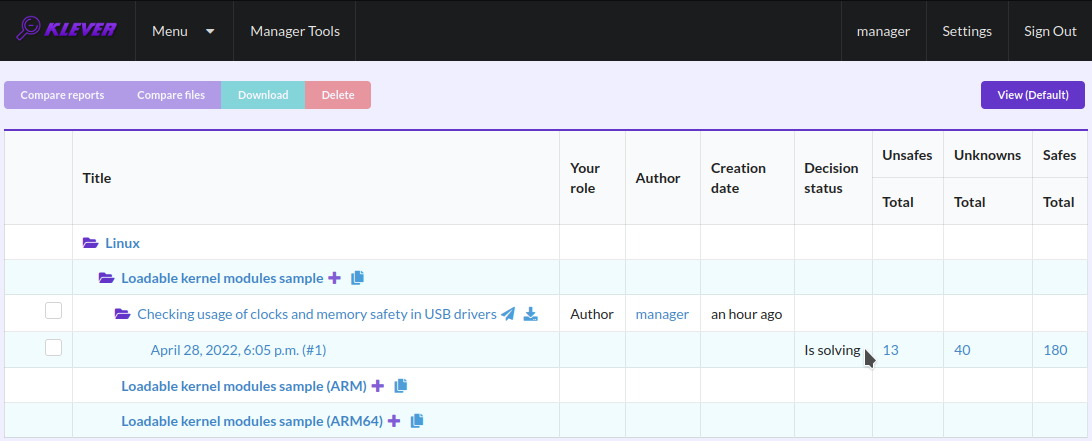

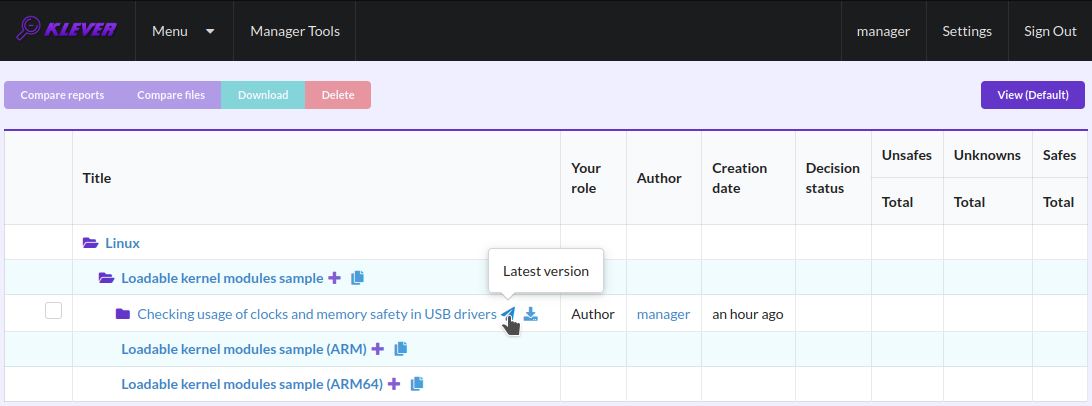

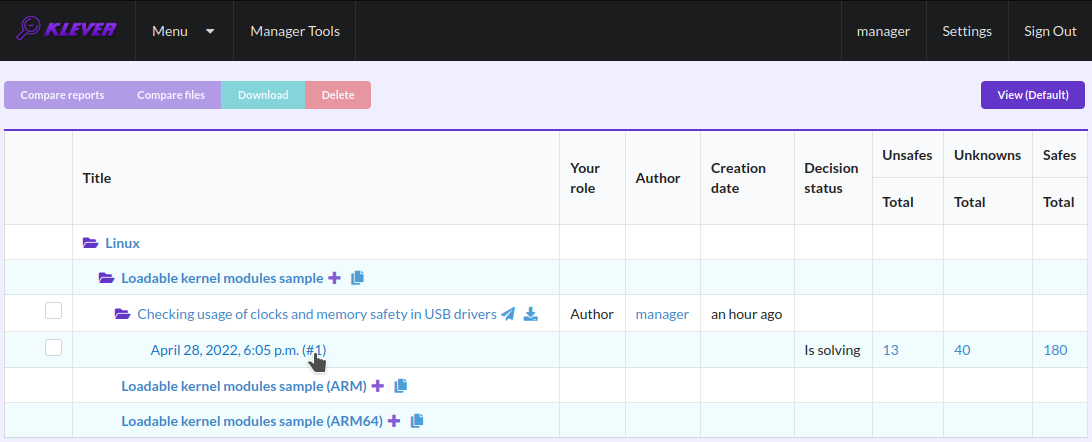

At the job tree page you can see all versions of particular jobs (Fig. 10) and their decision statutes (Fig. 11). Besides, you can open the page with details of the decision of the latest job version (Fig. 12) or the page describing the decision of the particular job version (Fig. 13).

Fig. 10 Showing job versions

Fig. 11 The status of the decision of the job version

Fig. 12 Opening the page with the decision of the latest job version

Fig. 13 Opening the page with the decision of the particular job version

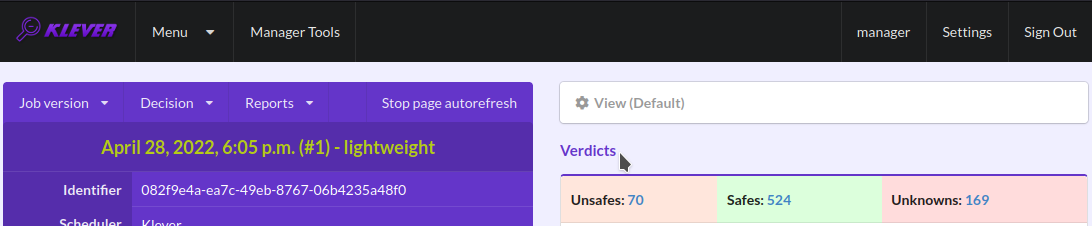

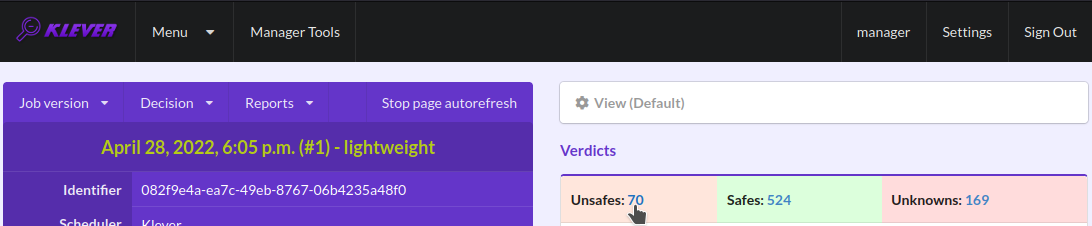

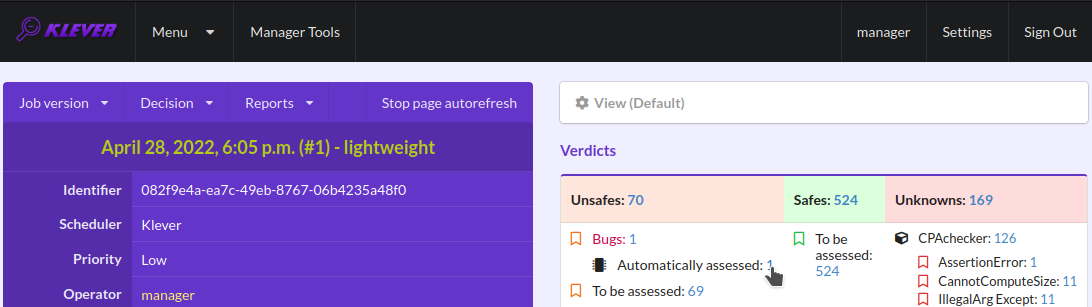

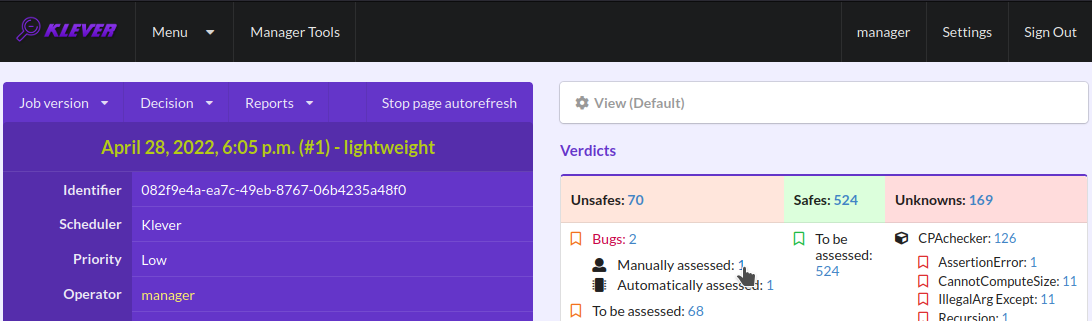

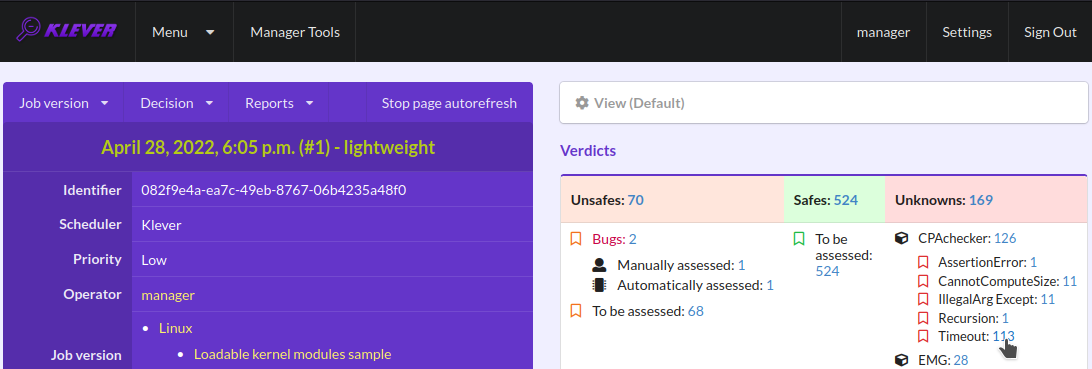

Analyzing Verification Results

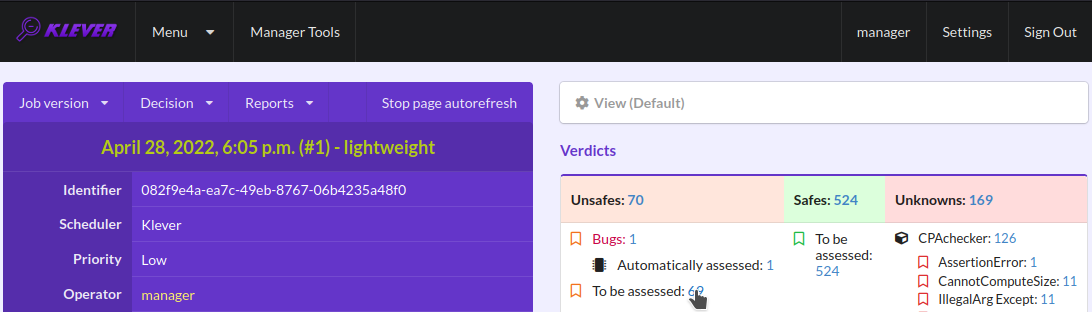

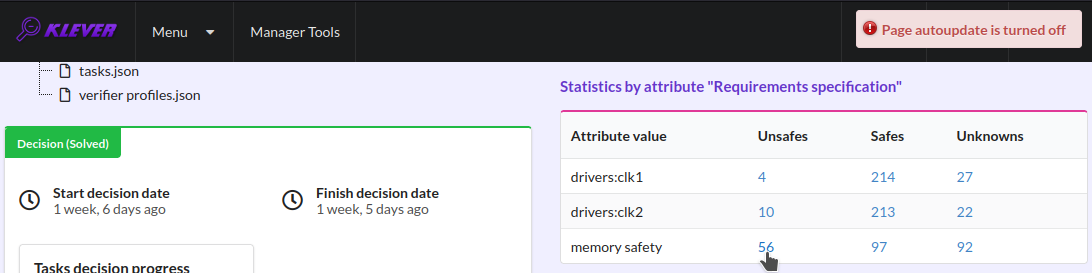

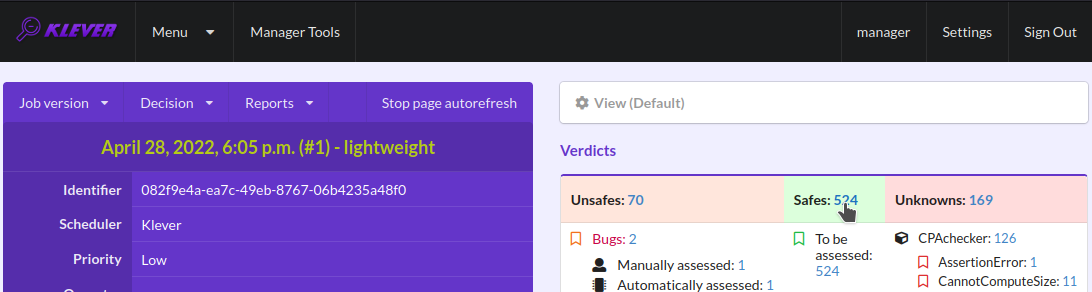

Klever can fail to generate and decide tasks. In this case it provides users with unknown verdicts, otherwise there are safe or unsafe verdicts (Fig. 14). You already saw the example with summaries of these verdicts at the job tree page (Fig. 11). In this tutorial we do not consider in detail other verdicts rather than unsafes that are either violations of checked requirements or false alarms (Fig. 15). Klever reports unsafes if so during the decision of the job version and you can assess them both during the decision and after its completion.

Fig. 14 Verdicts

Fig. 15 The total number of unsafes reported thus far

During assessment of unsafes experts can create marks that can match other unsafes with similar error traces (we consider marks and error traces in detail within next sections). For instance, there is a mark that matches one of the reported unsafes (Fig. 16). Automatic assessment can reduce efforts for analysis of verification results considerably, e.g. when verifying several versions or configurations of the same software. But experts should analyze such automatically assessed unsafes since the same mark can match unsafes with error traces that look very similar but correspond to different faults. Unsafes without marks need assessment as well (Fig. 17). When checking several requirement specifications in the same job, one is able to analyze unsafes just for a particular requirements specification (Fig. 18).

Fig. 16 The total number of automatically assessed unsafes

Fig. 17 The total number of unsafes without any assessment

Fig. 18 The total number of unsafes corresponding to the particular requirements specification

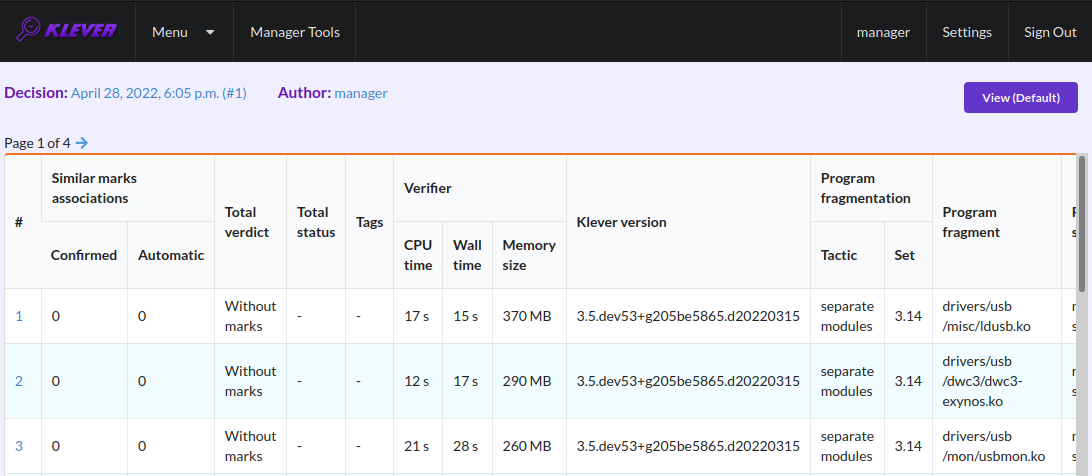

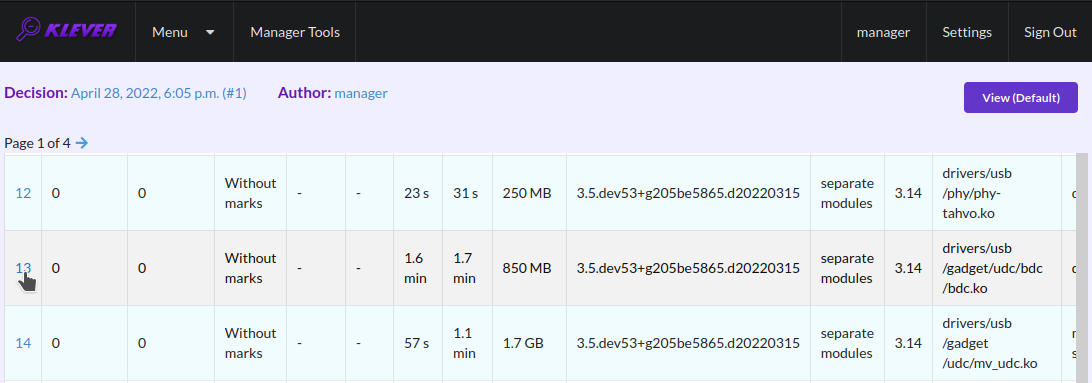

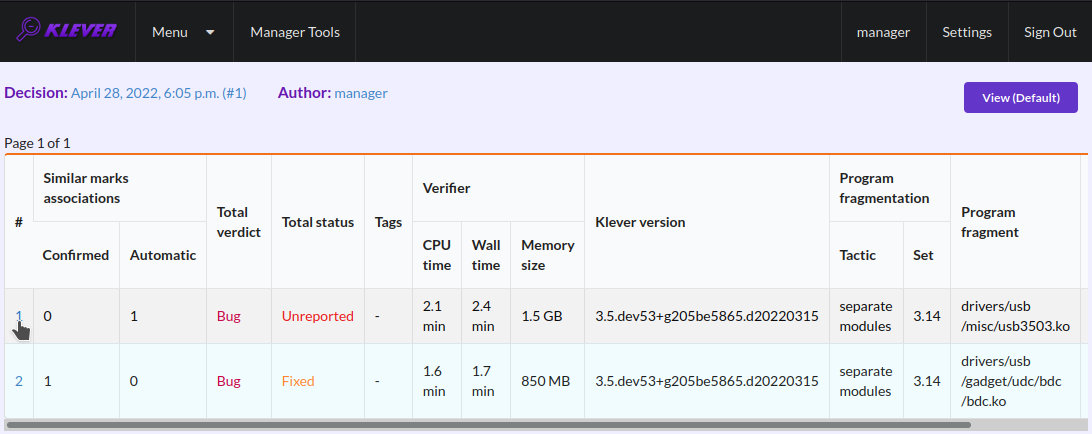

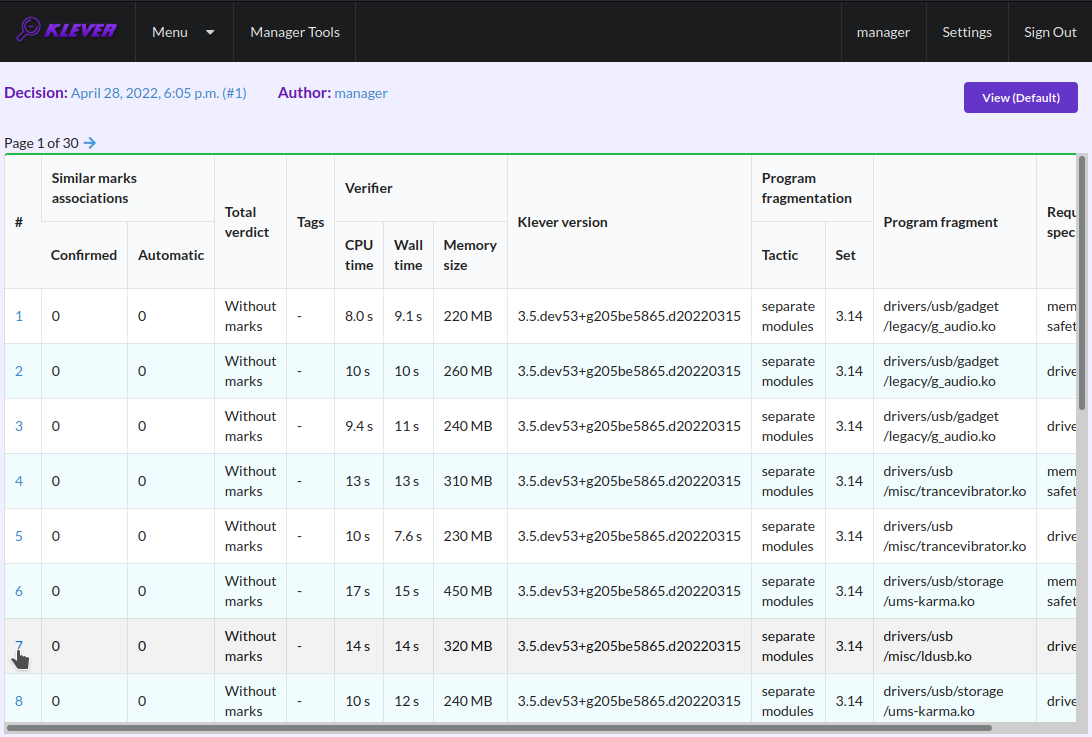

After clicking on the links in Fig. 15- Fig. 18 you will be redirected to pages with lists of corresponding unsafes (e.g. Fig. 19). If there is the only element in such a list you will see an appropriate error trace immediately. For further analysis we recommend clicking on an unsafe index on the left to open a new page in a separate tab (Fig. 20). To return back to the job version/decision page you can click on the title of the job decision on the top left (Fig. 21). This can be done at any page with such the link.

Fig. 19 The list of unsafes without any assessment

Fig. 20 Opening the error trace corresponding to the unsafe without any assessment

Fig. 21 Moving back to the job version/decision page

Analyzing Error Traces

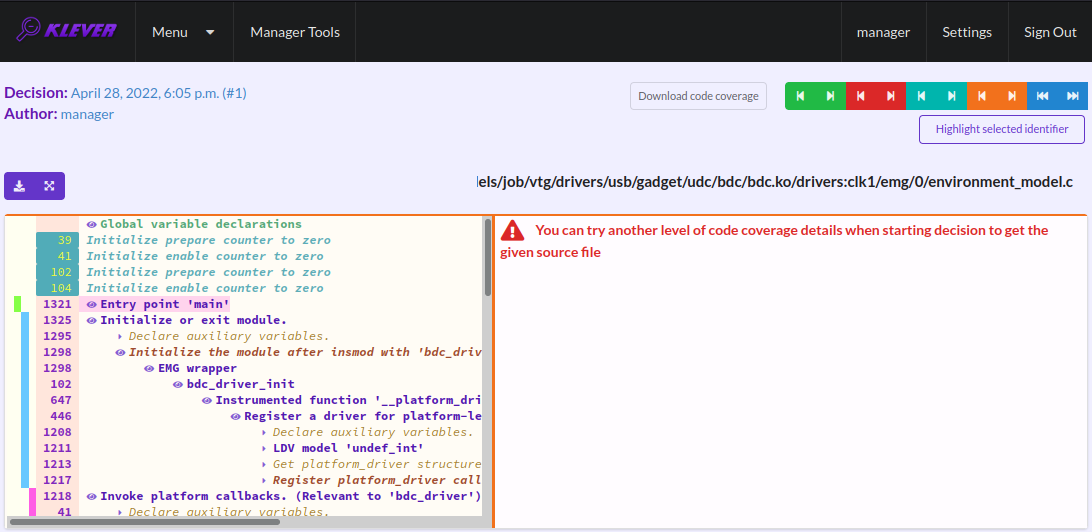

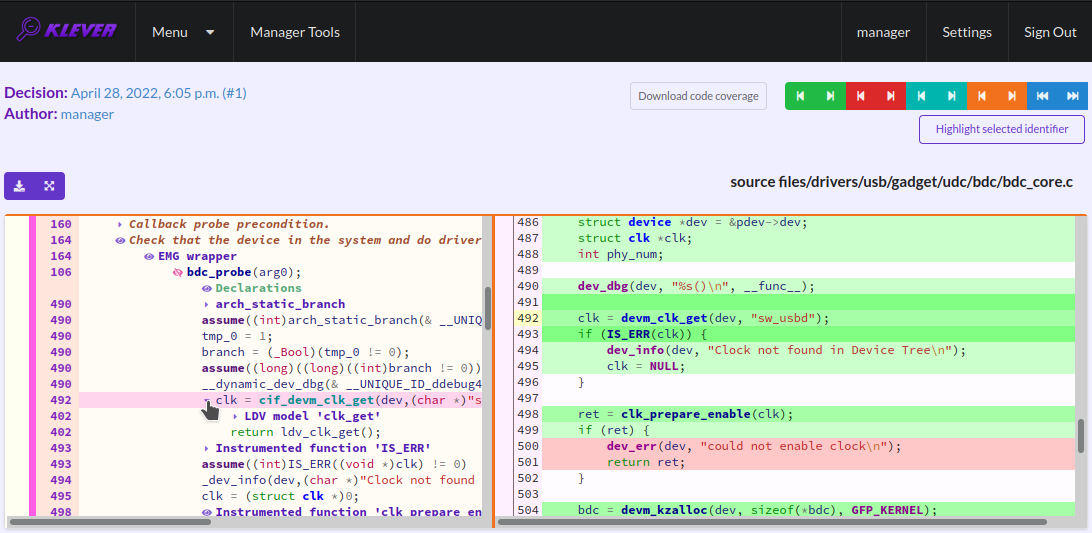

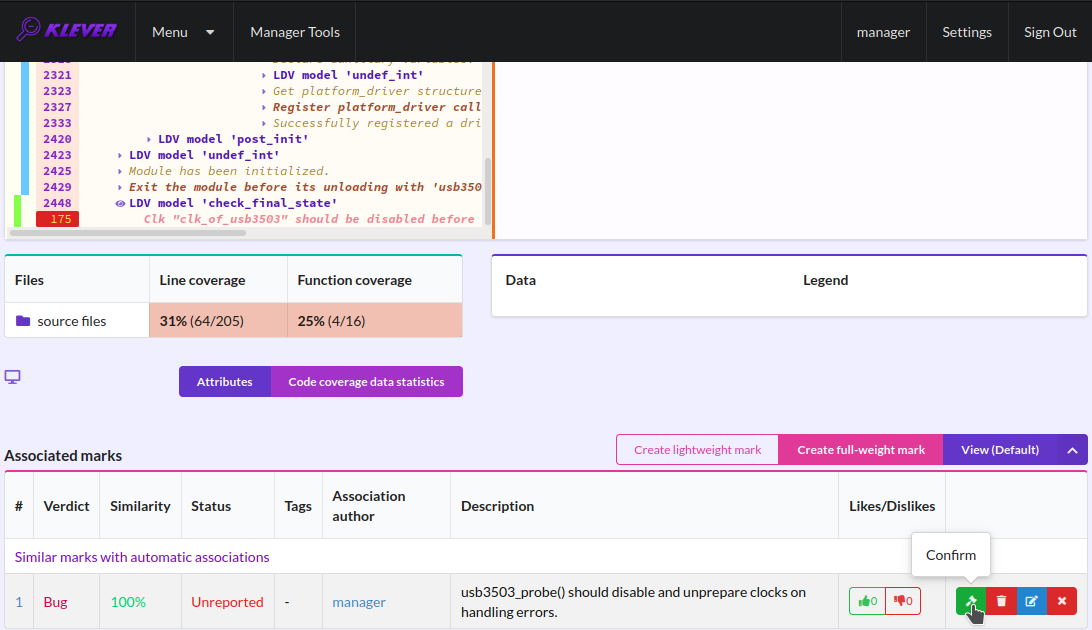

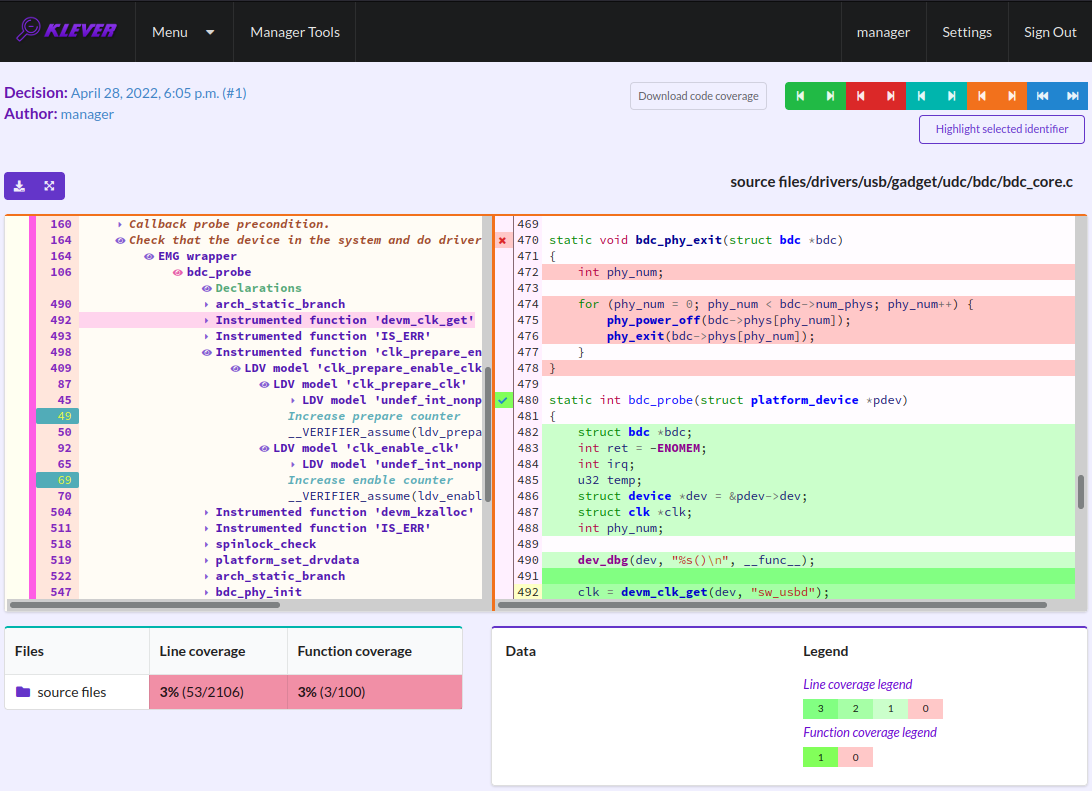

After clicking on links within the list of unsafes like in Fig. 20, you will see corresponding error traces. For instance, Fig. 22 demonstrates an error trace example for module drivers/usb/gadget/udc_bdc_bdc.ko and requirements specification drivers:clk1.

Fig. 22 The error trace for module drivers/usb/gadget/udc/bdc/bdc.ko and requirements specification drivers:clk1

An error trace is a sequence of declarations and statements in a source code of a module under verification and an environment model generated by Klever. Besides, within that sequence there are assumptions specifying conditions that a verification tool considers to be true. Declarations, statements and assumptions represent a path starting from an entry point and ending at a violation of one of checked requirements. The entry point analogue for userspace programs is function main while for Linux loadable kernel modules entry points are generated by Klever as a part of environment models. Requirement violations do not always correspond to places where detected faults should be fixed. For instance, the developer can omit a check for a return value of a function that can fail. As a result various issues, such as leaks or null pointer dereferences, can be revealed somewhere later.

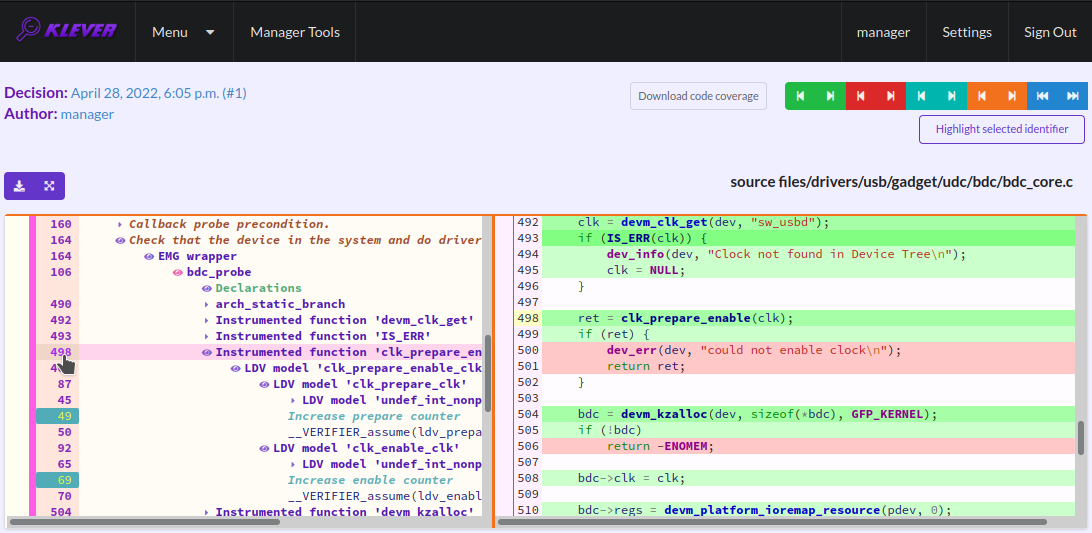

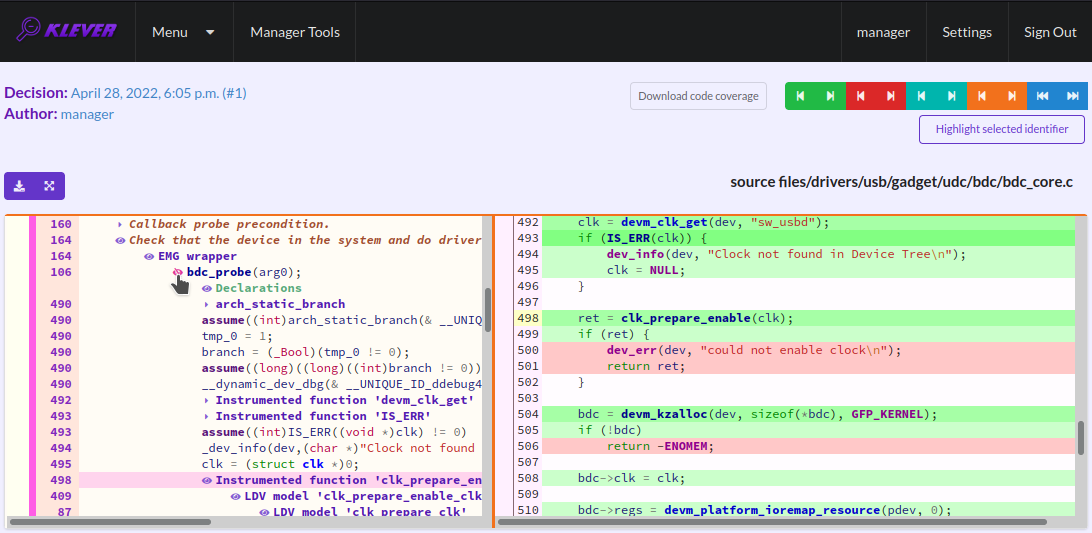

Numbers in the left column correspond to line numbers in source files and models. Source files and models are displayed to the right of error traces. Fig. 22 does not contain anything at the right part of the window since there should be the environment model containing the generated main function but by default models are not demonstrated for users in the web interface5. If you click on a line number corresponding to an original source file, you will see this source file as in Fig. 23. Error traces and source files are highlighted syntactically and you can use cross references for source files to find out definitions or places of usage for various entities.

Fig. 23 Showing the line in the original source file corresponding to the error trace statement

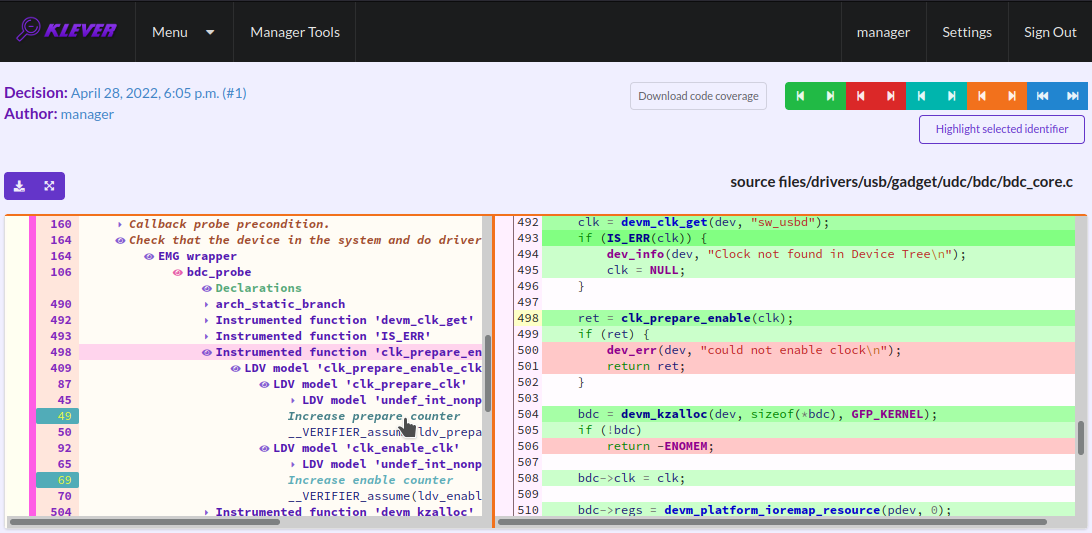

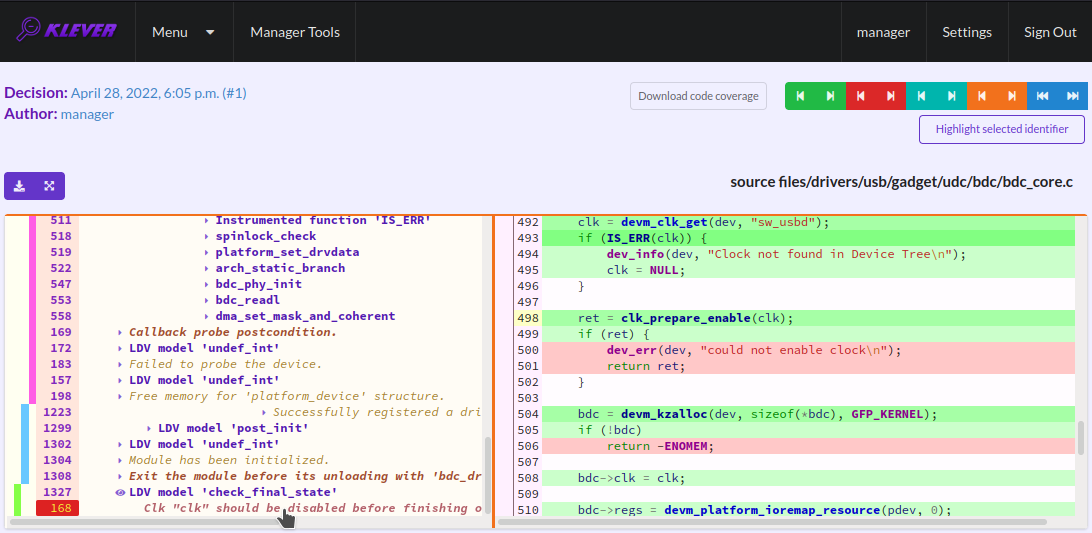

You can click on eyes and on rectangles to show hidden parts of the error trace (Fig. 24-Fig. 25). Then you can hide them back if they are out of your interest. The difference between eyes and rectangles is that functions with eyes have either notes (Fig. 26) or warnings (Fig. 27) at some point of their execution, perhaps, within called functions. Notes describe important actions in models as well as those places in source files that are related to reported requirement violations from the standpoint of the verification tool. Warnings represent places where Klever detects violations of checked requirements.

Fig. 24 Showing hidden declarations, statements and assumptions for functions with notes or warnings

Fig. 25 Showing hidden declarations, statements and assumptions for functions without notes or warnings

Fig. 26 The error trace note

Fig. 27 The error trace warning

You can see that before calling module initialization and exit functions as well as module callbacks there is additional stuff in the error trace. These are parts of the environment model necessary to initialize models, to invoke module interfaces in the way the environment does and to check the final state. This tutorial does not consider models in detail, but you should keep in mind that Klever can detect faults not only directly in the source code under verification but also when checking something after execution of corresponding functions. For instance, this is the case for the considered error trace (Fig. 27).

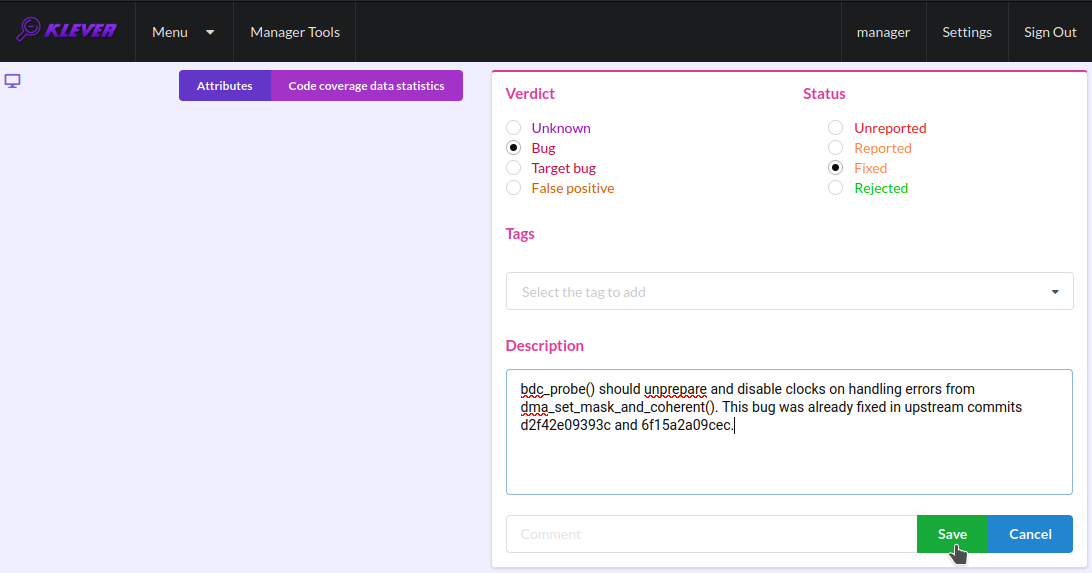

Creating Marks

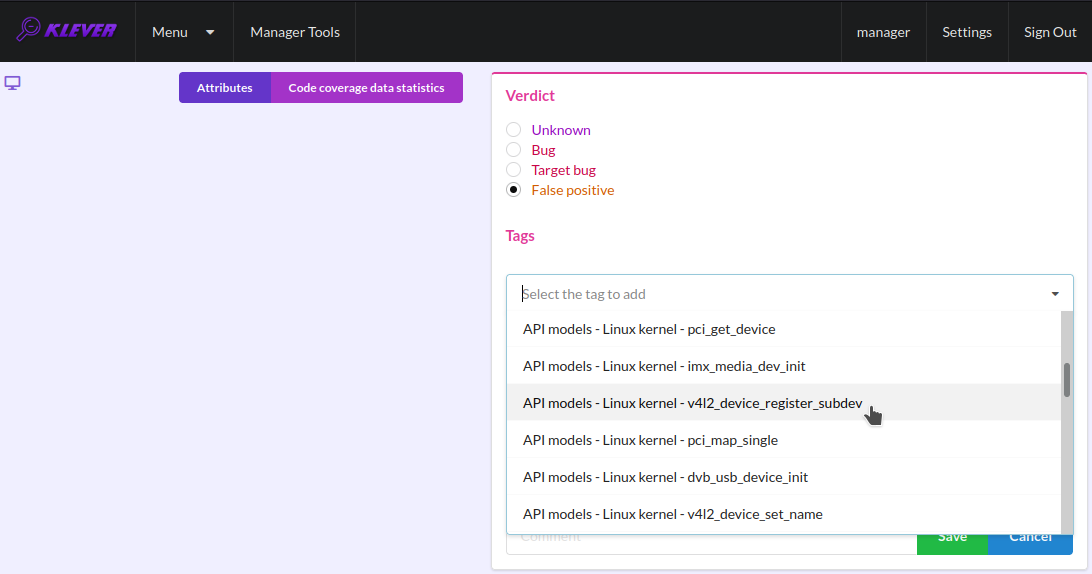

The analyzed unsafe corresponds to the fault that was fixed in upstream commits d2f42e09393c and 6f15a2a09cec to the Linux kernel. To finalize assessment you need to create a new mark (Fig. 28-Fig. 29):

Specify a verdict (Bug in our example).

Specify a status (Fixed).

Provide a description.

Save the mark.

Fig. 28 Starting the creation of a new lightweight mark

Fig. 29 The creation of the new lightweight mark

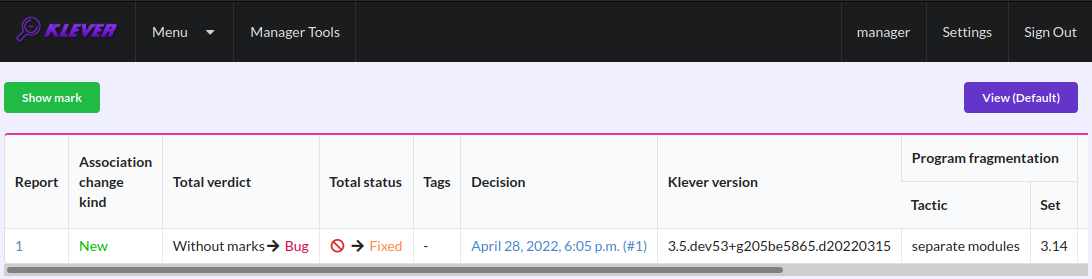

After that you will be automatically redirected to the page demonstrating changes in total verdicts (Fig. 30). In our example there is the only change that corresponds to the analyzed unsafe and the new mark. But in a general case there may be many changes since the same mark can match several unsafes, and you may need to investigate these changes.

Fig. 30 Changes in total verdicts

After creating the mark you can see the first manually assessed unsafe (Fig. 31). Besides, as it was already noted, you should investigate automatically assessed unsafes by analyzing corresponding error traces and marks and by (un)confirming their associations (Fig. 32-Fig. 33).

Fig. 31 The total number of manually assessed unsafes

Fig. 32 Opening the error trace of the unsafe with automatic assessment

Fig. 33 Confirming the automatic association

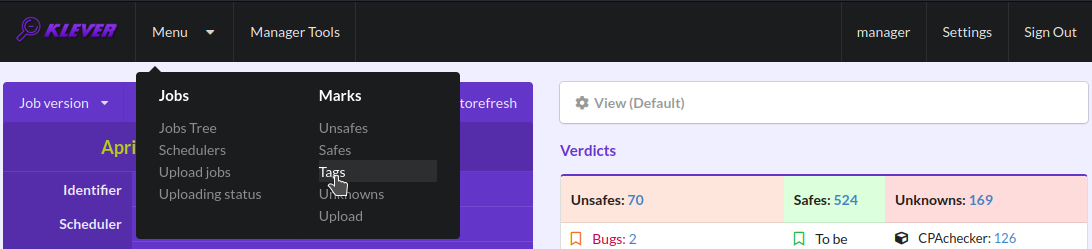

False alarms can happen due to different reasons. You can find a tree of corresponding tags representing most common false alarm reasons at (Fig. 34).

Fig. 34 Opening the tags page

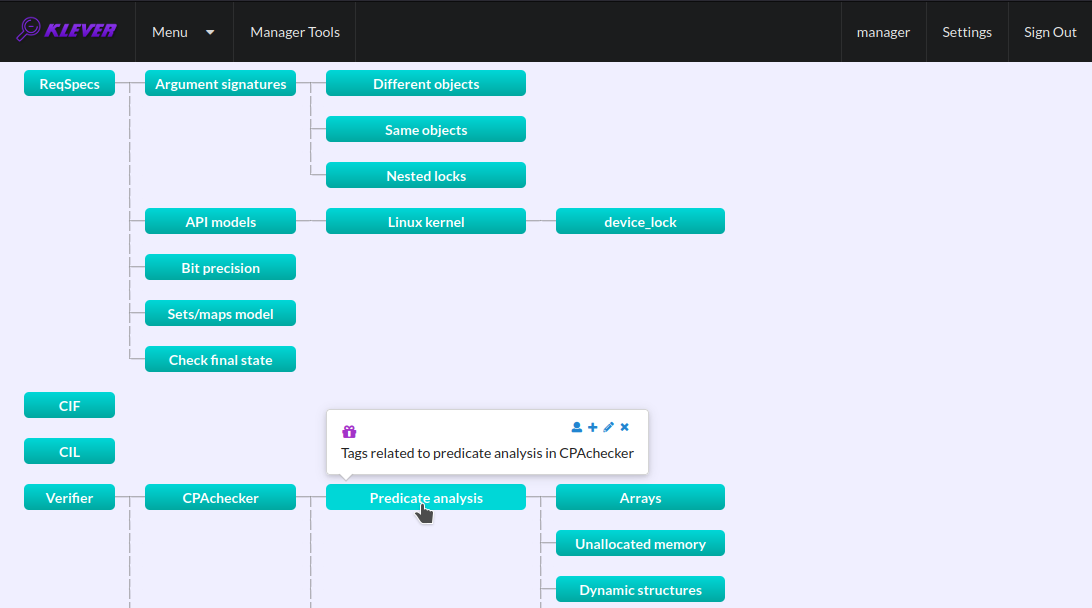

Each tag has a description that is shown when covering a tag name (Fig. 35).

Fig. 35 Showing tag description

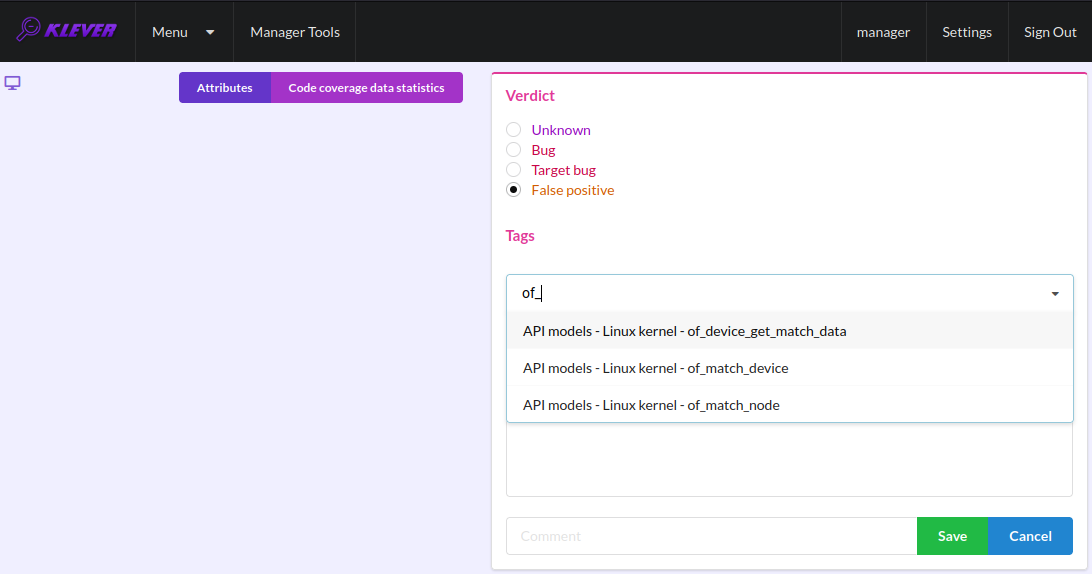

You can choose appropriate tags during creation of marks from the dropdown list (Fig. 36). This list can be filtered out by entering parts of tag names (Fig. 37).

Fig. 36 Choosing tag from the dropdown list

Fig. 37 Entering tag name part

Analysis of Code Coverage Reports

Code coverage reports demonstrate parts (lines and functions at the moment) of the target program source code and models that were considered during verification. Though users can expect complete code coverage because programs are analyzed statically, actually this may not be the case due to incomplete or inaccurate environment models that make some code unreachable or due to some limitations of verification tools, e.g. they can ignore calls of functions through function pointers. When users need good or excellent completeness of verification it is necessary to study code coverage reports.

There is different semantics of code coverage for various verdicts:

Unsafes - code coverage reports show exactly those parts of the source code that correspond to error traces. You will get another code coverage after eliminating reasons of corresponding unsafes.

Safes - code coverage reports show all parts of the source code that the verification tool analyzed. You should keep in mind that there may be different reasons like specified above that prevent the verification tool from reaching complete code coverage. Since Klever lacks correctness proofs (currently, verification tools do not provide useful correctness proofs), analysis of code coverage reports becomes the only approach for understanding whether safes are good or not.

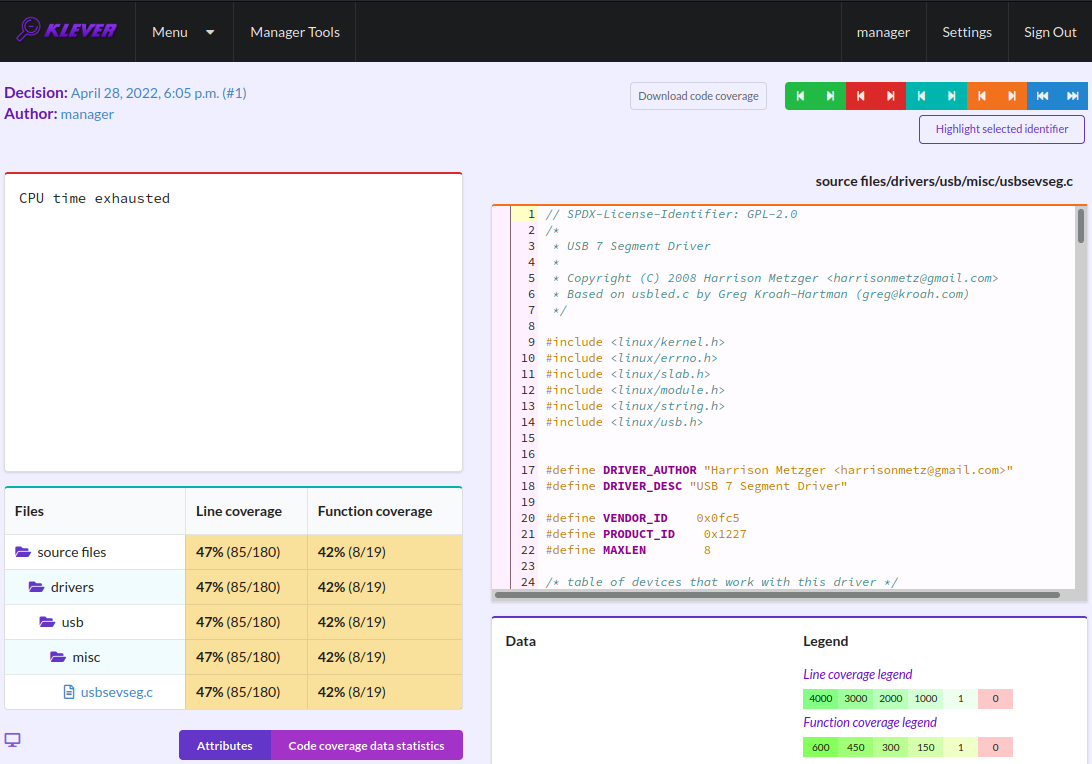

Unknowns (Timeouts) - code coverage shows those parts of the target program source code that the verification tool could investigate until it was terminated after exhausting the specified amount of CPU time. You can find out and change corresponding limits in file tasks.json (for instance, see Fig. 4).

By default, Klever provides users with code coverage reports just for the target program source code. If one needs to inspect code coverage for various models it is necessary to start the decision of the job with a custom configuration where setting “Code coverage details” should be either “C source files including models” or “All source files”. This can result in quite considerable overhead, so it is not always switched on.

Code Coverage Reports for Unsafes

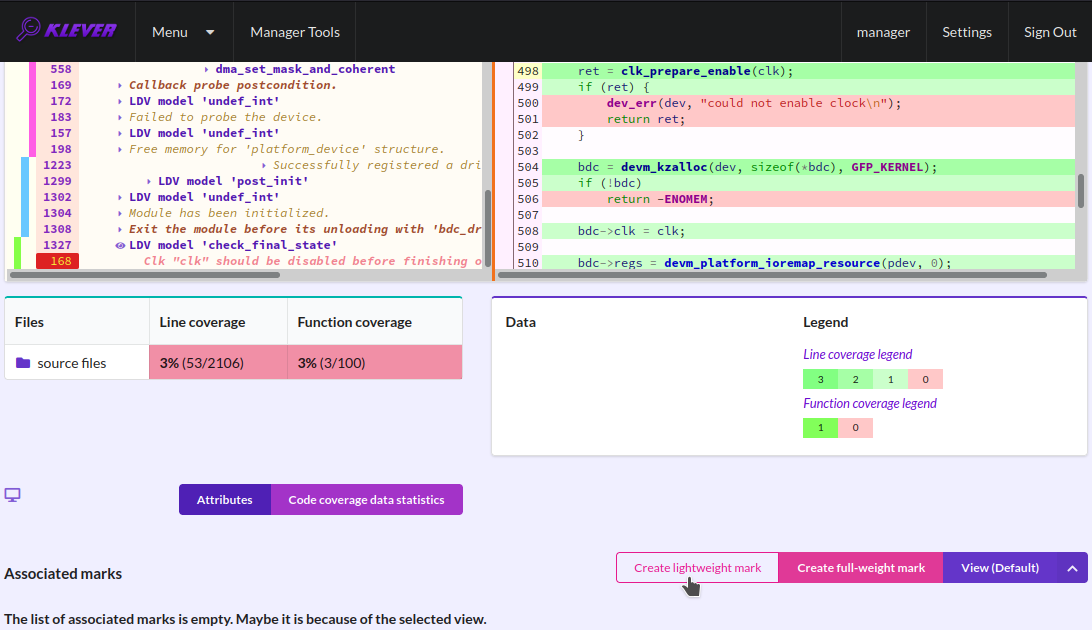

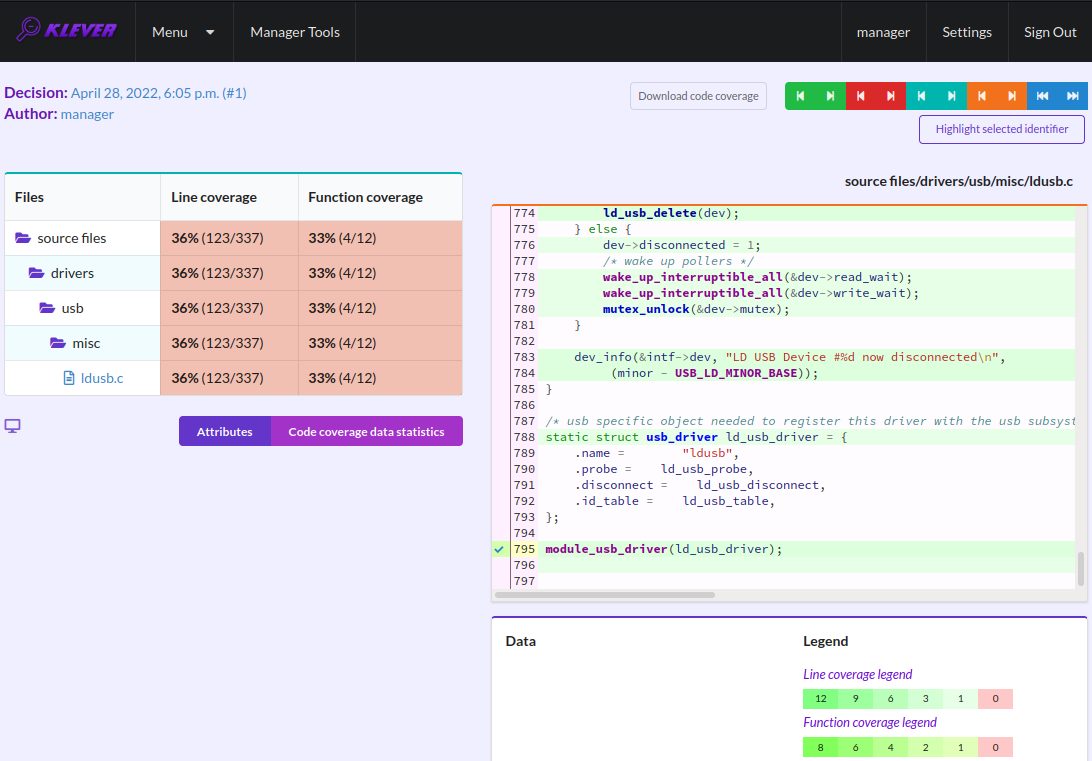

For unsafes, you will see code coverage reports when analyzing corresponding error traces like in Fig. 38. Code coverage of a particular source file is shown on the right. There is a code coverage legend beneath it. The pink background and red crosses point out uncovered lines and functions respectively. More times lines were analyzed during verification more intensive green background is used for them. Green ticks denote covered functions.

Fig. 38 Code coverage report for the unsafe error trace

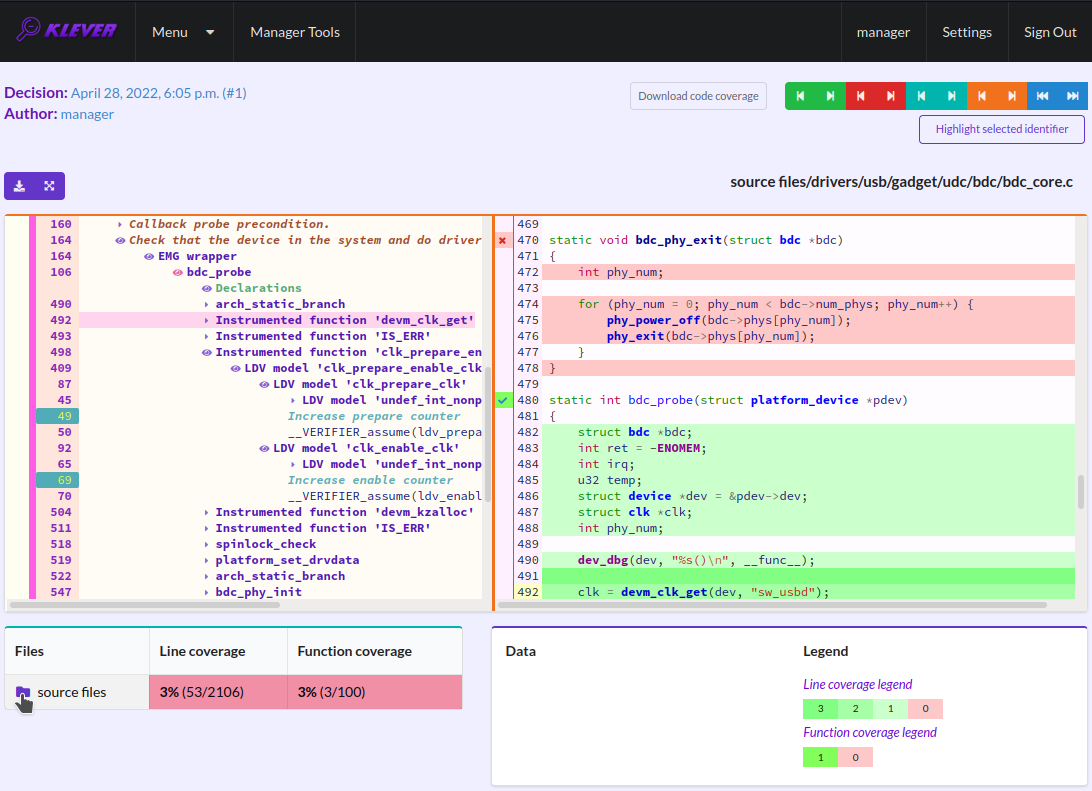

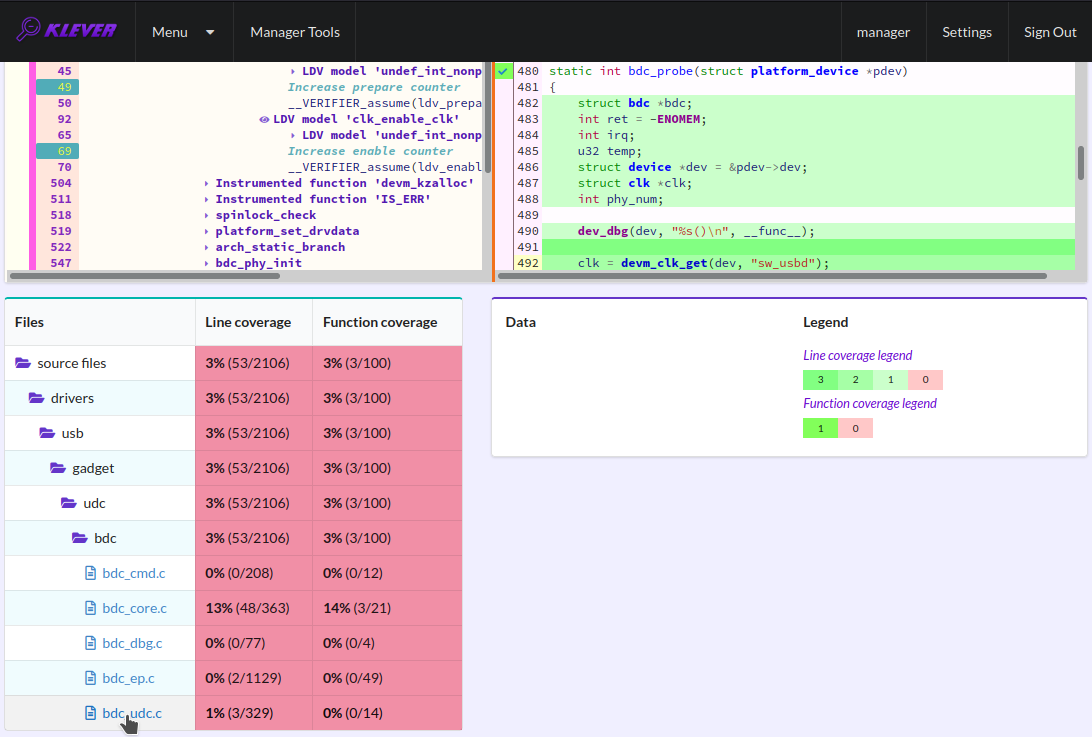

There is code coverage statistics as well as a source tree on the left of the code coverage legend (Fig. 39). You can click on names of directories and source files to reveal corresponding statistics and to show code coverage for these source files (Fig. 40). The latter has sense for tasks consisting of several source files.

Fig. 39 Code coverage statistics

Fig. 40 Opening code coverage for the particular source file

Code Coverage Reports for Safes

To open code coverage reports for safes you need to open a page with a list of safes (Fig. 41) and then open a particular safe page (Fig. 42). Like for unsafe you can analyze the code coverage legend and statistics as well as to show code coverage for particular source files (Fig. 43).

Fig. 41 Opening page with the list of safes

Fig. 42 Opening safe page

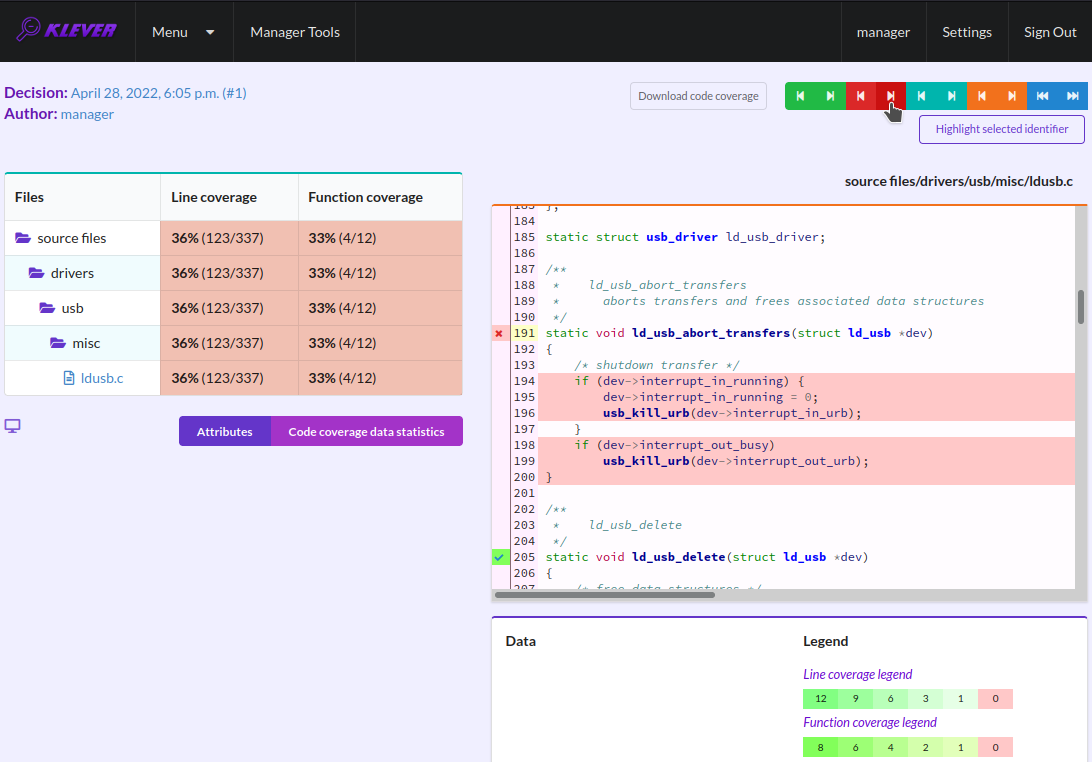

Fig. 43 Code coverage report for the safe

The safe verdict does not imply program correctness since some parts of the program could be not analyzed at all and thus uncovered. To navigate to the next uncovered function you should press the red button with the arrow (Fig. 44). Then you can find places where this uncovered function is invoked and why this was not done during verification (in the considered case this was due to lack of environment model specifications for callbacks of the usb_class_driver structure). Besides, while a function can be covered there may be uncovered lines within it. For instance, this may be the case due to the verification tool assumes that some conditions are always true or false.

Fig. 44 Showing next uncovered function

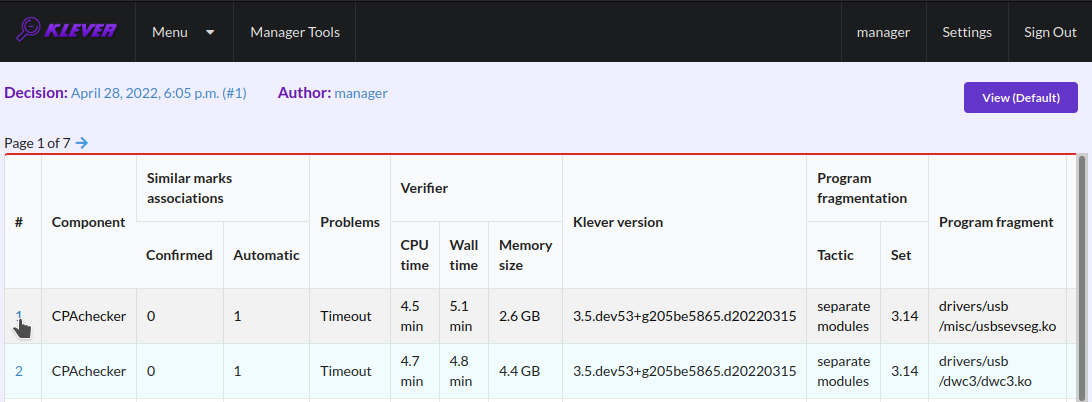

Code Coverage Reports for Unknowns

If you would like to investigate the most complicated parts of the target program source code that can cause unknown (timeout) verdicts, you should open a page with a list of timeouts (Fig. 45) and then open a particular timeout page (Fig. 46). A timeout code coverage report (Fig. 47) looks almost like the safe code coverage report (Fig. 43).

Fig. 45 Opening page with the list of timeouts

Fig. 46 Opening timeout page

Fig. 47 Code coverage report for the timeout

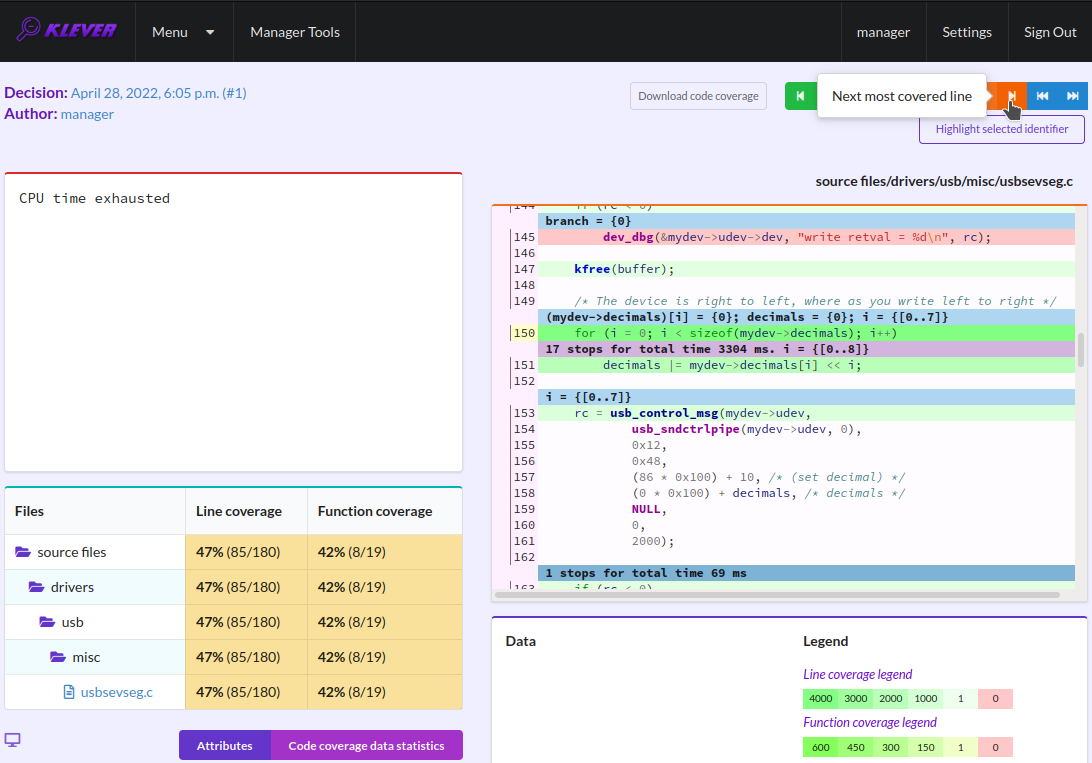

To traverse through most covered lines that likely took most of the verification time you should press the orange button with the arrow (Fig. 48). If the task includes more than one source file it may be helpful for you to investigate lines that are most covered globally. For this it is necessary to press the blue button with the arrow. Quite often loops can serve as a source of complexity especially when loop boundaries are not specified/modelled explicitly.

Fig. 48 Showing next most covered line

You can find more details about verification results and their expert assessment in [G20].

What’s Next?

We assume that you can be unsatisfied fully with a quality of obtained verification results. Perhaps, you even could not obtain them at all. This is expected since Klever is an open source software developed in Academy and we support verification of Linux kernel loadable modules for evaluation purposes primarily. Besides, this tutorial misses many various use cases of Klever. Some of these use cases are presented in other top-level sections of the user documentation. We are ready to discuss different issues and fix crucial bugs.

- 1

If this is not the case, you should adjust paths to build bases below respectively.

- 2

Several build bases are deployed together with Klever by default, but they contain just a small subset of Linux kernel loadable modules. The corresponding Linux kernel version is 3.14.79, target architectures are x86-64, ARM and ARM64.

- 3

You can open the Klever web interface from other machines as well, but you need to set up appropriate access for that.

- 4

For the considered example each task is a pair of a Linux loadable kernel module and a requirements specification. There are 259 modules under verification and 3 requirement specifications to be checked, so there are 777 tasks in total.

- 5

If you want to see these models, you have to start the decision of the job version with a custom configuration (Fig. 4). There you should select value “C source files including models” for option “Code coverage details”. You should keep in mind that this will considerably increase the time necessary for generation of tasks and the overall time of the decision of the job version.

- G20

Gratinskiy V.A., Novikov E.M., Zakharov I.S. Expert Assessment of Verification Tool Results. Proceedings of the Institute for System Programming of the RAS (Proceedings of ISP RAS), volume 32, issue 5, pp. 7-20. 2020. https://doi.org/10.15514/ISPRAS-2020-32(5)-1. (In Russian)